17 Nov 2015

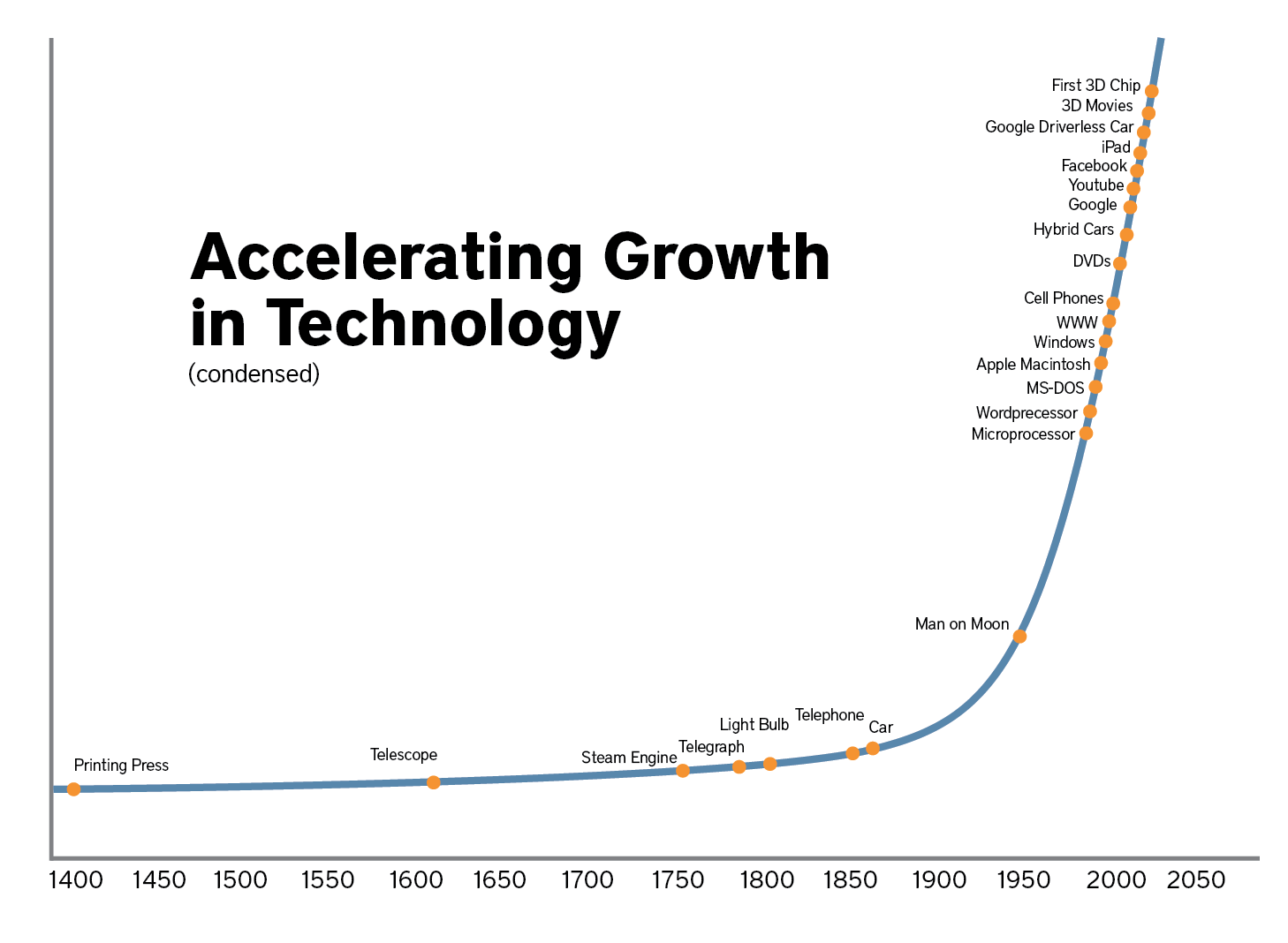

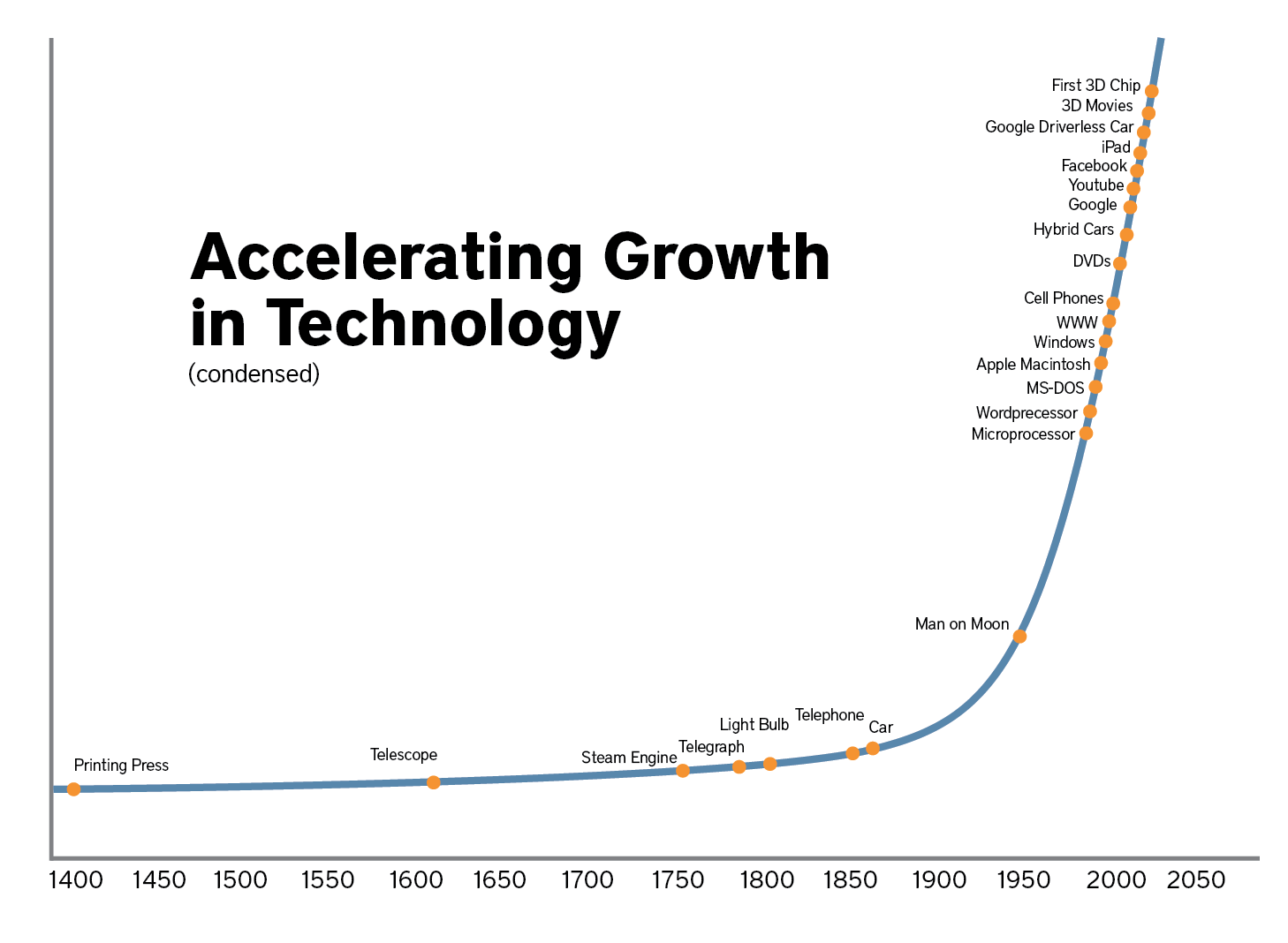

This weekend (Nov 14-15th) I spoke at Fullstack Toronto about the importance of providing autonomy, mastery, and purpose to our product development teams (designers, engineers, product managers, etc.) as key ingredients of making them more productive and creative. Understanding how we can maximize our productivity is becoming increasingly important as the pace of innovation continues to accelerate. This means a firm's ability to compete over the long term increasingly depends on its ability to innovate and adapt to changes in the market at large.

The way we organize, interact, and ideate as individuals and groups directly impacts our ability to innovate: done poorly, it increases the cost of change (reducing how much we can try) and reduces our ability to create (less engaged workers, etc.). This is especially true in software development, which is fundamentally a team sport: a product is the culmination of a group working together over a period of time. It's the result of every conversation, debate, line of code written, design iterated upon, and idea shared.

Technology and new insights in cognitive science are paving the way for a paradigm shift in the way we organize and approach work. We are moving from a top-down control model, where front-line workers hold all the knowledge and responsibility but none of the authority, to a bottom-up approach where authority resides with those who have knowledge and responsibility, while managers' jobs are to define and articulate vision, build culture, and measure outcomes. New tools and methodologies like continuous integration and delivery, Docker, and microservices are enabling teams to focus on building platforms that maximize the autonomy of their dependents (other teams in the organization that build on top of them) by passing off control that aligns with their responsibilities.

New opportunities will emerge for both companies and individuals as we race to redefine the way we work together to maximize our productivity. Companies that cannot adapt in the long run will languish while those who can build and maintain a culture of innovation at scale will supersede them. Individuals inside these organizations will have an opportunity to help redefine internal culture as the companies look for leaders to help invert control (from the top to the bottom).

Slides

Resources

02 Oct 2015

Software development is hard, especially at scale with code in production. You are constantly trying to switch out the engines, support beams, and window panes of an airplane while it’s in flight. We create processes for how we track issues, organize and submit code, and prevent regressions as a means of dealing with complexity. These are the rails that our organizations operate on so we don’t crash into the ground. A cornerstone of these processes is how history (why we made certain decisions) and direction (where we are going and why) are recorded, translated (between development, product, etc.), and disseminated. Both are essential ingredients for teams and individuals to build incredible products autonomously with as little friction as possible.

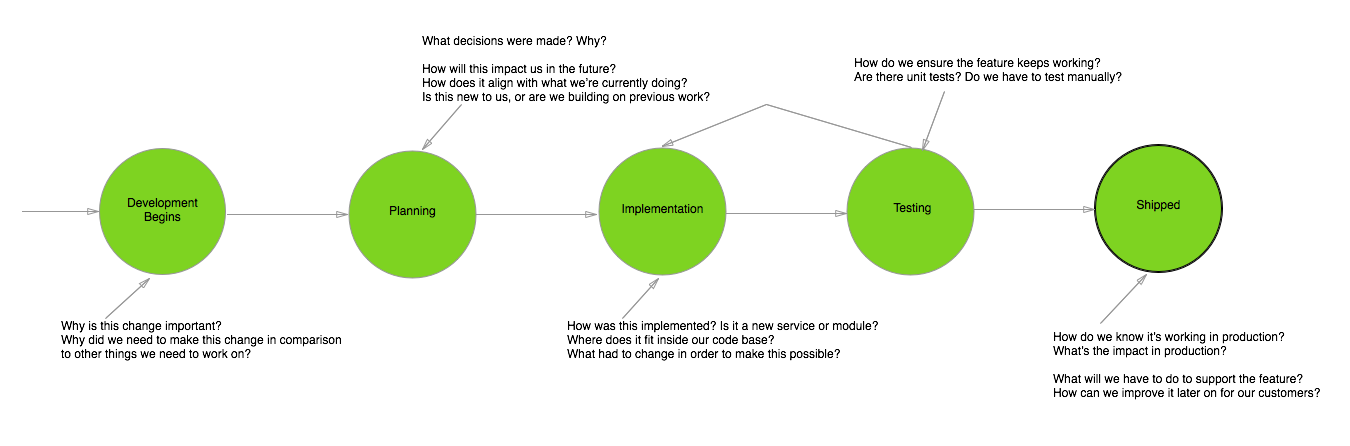

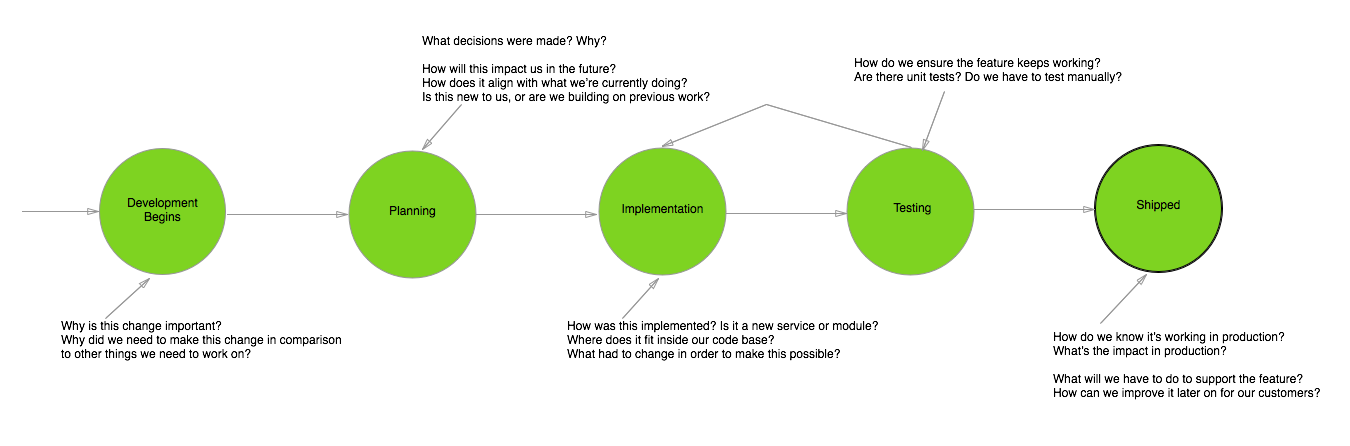

For a developer to make the most effective change to a code base they must understand how the system operates, what decisions led to that state, and why. It also means understanding how different components of the system are designed, tested, deployed, and behave in production. This knowledge is often the by-product of the development process (illustrated below) and tends to be locked up in a few individuals’ heads (the person who implemented the feature and perhaps the code reviewer).

At first glance, these questions seem trivial but this information will continually impact the cost of development (tech debt, supporting multiple systems or frameworks, etc). One example of this cost is ensuring that the system continues to behave as intended after a change has been made. A popular way we’ve dealt with this complexity is by writing automated tests that run after every change to ensure we haven’t regressed on any important behavior. What we’re really doing is recording how the system should behave so others, including computers, can leverage this knowledge.
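As a rough illustration of recording expected behavior so that a computer can check it, here is a minimal Go sketch. The function `normalizeTag` and the helpers `behaviorCase` and `checkBehavior` are hypothetical names invented for this example; in a real project the same cases would more likely live in a `_test.go` file and be run with `go test` after every change.

```go
package main

import (
	"fmt"
	"strings"
)

// normalizeTag stands in for a piece of important behavior (hypothetical):
// tags are trimmed and lower-cased before they are stored.
func normalizeTag(tag string) string {
	return strings.ToLower(strings.TrimSpace(tag))
}

// behaviorCase records one expectation about how the system should behave.
type behaviorCase struct {
	name  string
	input string
	want  string
}

// checkBehavior runs every recorded case and describes each one that no
// longer holds, which is how a regression shows up after a change.
func checkBehavior(cases []behaviorCase) []string {
	var failures []string
	for _, c := range cases {
		if got := normalizeTag(c.input); got != c.want {
			failures = append(failures, fmt.Sprintf("%s: got %q, want %q", c.name, got, c.want))
		}
	}
	return failures
}

func main() {
	cases := []behaviorCase{
		{name: "trims whitespace", input: "  golang ", want: "golang"},
		{name: "lower-cases", input: "Docker", want: "docker"},
	}
	fmt.Println("regressions:", len(checkBehavior(cases)))
}
```

The cases double as documentation: someone new to the code can read them to learn what the function is supposed to do without asking its author.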

Understanding our past allows us to make changes to the system and deliver them into production successfully. On top of this it helps shape our vision of how the system will work in the future. Which brings us to the second important component of any knowledge strategy: communicating the intended direction and creating alignment.

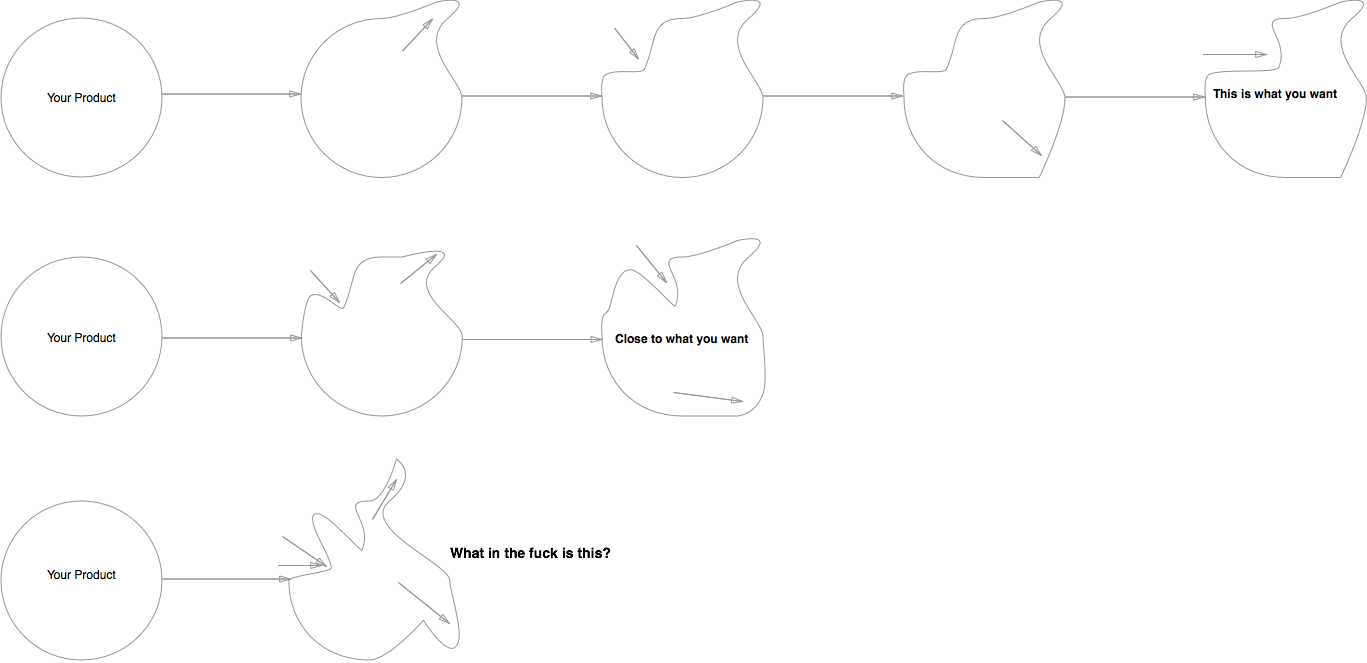

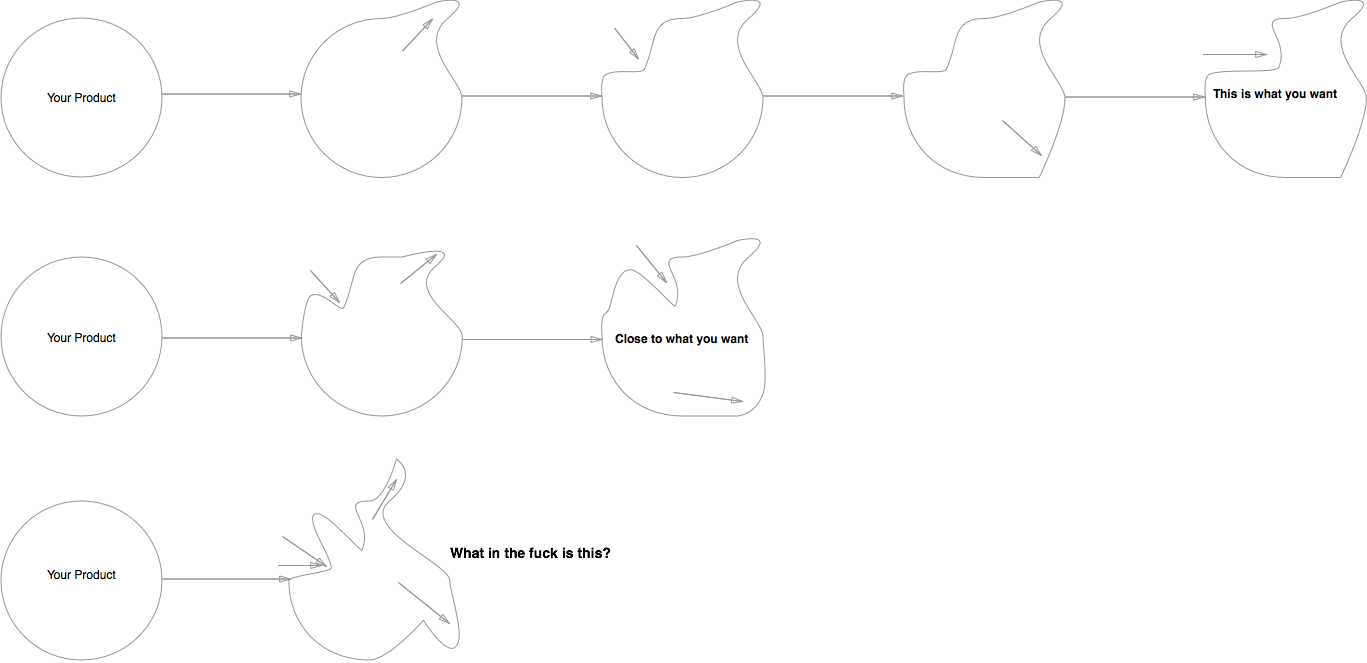

How and when should we be logging events? Are there certain patterns we should be emphasizing or avoiding? How and when should we be committing transactions? Should we be adding more responsibility to this module? These are the types of questions that, if they go unanswered, will quickly lead to a spaghetti code base. The impact of lacking alignment is multiplied by the number of people working on the same code base (which I’ve illustrated below), so the problem becomes increasingly difficult to manage as more developers contribute.

While you may get to a place faster (because you can do more), it’s not necessarily what you want or need from the system. This is because developers tend to work autonomously (good) which enables you to parallelize the amount of work being done (also good). The problem is that they’re making changes with incomplete knowledge about where the system needs to go (bad). This tends to end with developers either stopping to ask questions (good and bad, they are no longer working in parallel and autonomously) or they fill in the gap with their own ideas (bad -- if your goal is architectural correctness and avoiding spaghetti code).

There are many ways to handle this problem such as having an “architect” role (which has mixed results) or some sort of upfront review process for changes that impact the system’s design (depending on the format this can work really well or it can be incredibly toxic). However, nothing is more important than frequent updates from leadership on the direction and priority of product, technology and engineering process. If these things are clear then developers are equipped with the best information about the road ahead and can make the most effective and efficient decisions about the changes they are implementing.

When and how you think about solving these problems is completely dependent on the size of your system and development team. If you are small, it’s easy to stay in sync and you make decisions quickly. As you grow, ensuring everyone has the right historical context and is aligned about where you’re going becomes incredibly difficult. This can lead to amassed technical debt, a spaghetti code base, and hardened knowledge silos (only X knows how Y works). Ensuring you are building the right processes to record and distribute this knowledge will help you to navigate these challenges and allow you to keep delivering incredible products.

14 Mar 2015

Docker Powered Development Environment for Your Go Web App

Want to get started with Go and develop like the cool kids? I’m going to walk you through getting set up with a Docker-powered development environment that rebuilds as you make modifications locally. A complete example is available on GitHub for those that want to skip ahead.

The advantage of using a tool like Docker is that it provides you with a simple way to create a repeatable, shareable and deterministic development environment. This becomes increasingly valuable as you scale the number of people working on a project.

On top of this, a lot of cloud vendors now allow you to directly upload and run docker containers, making it easier to maintain consistency between production and development environments.

Step One: Dependencies

First of all you’ll need to download all the dependencies. Fortunately, I’ve listed them below with links to guides on how to install them.

NOTE: Mac OS X users will need to install Boot2Docker which is a wrapper around VirtualBox that provides a Linux guest to run containers. Instructions are provided in the Mac OS X link above.

Step Two: The Little HTTP Server that Could

Once we’ve got all of our tools, we can write our sample application that we’ll be containerizing with docker.

First we’ll need a place for our code to live.

    # Create a directory for our example app and then make it our
    # working directory.
    mkdir example-app
    cd example-app

Now open a file called main.go with your favourite text editor.

    package main

    import (
    	"fmt"
    	"log"
    	"net/http"
    	"os"
    )

    // handler logs the requested path and responds with a greeting.
    func handler(w http.ResponseWriter, r *http.Request) {
    	log.Println("Received Request: ", r.URL.Path)
    	fmt.Fprintf(w, "Hello World!")
    }

    func main() {
    	http.HandleFunc("/", handler)

    	// The port to listen on is supplied through the environment.
    	port := os.Getenv("PORT")
    	if port == "" {
    		log.Fatal("PORT environment variable was not set")
    	}

    	// Listen on the configured port rather than a hard-coded one.
    	err := http.ListenAndServe(":"+port, nil)
    	if err != nil {
    		log.Fatal("Could not listen: ", err)
    	}
    }

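You should now be able to build and run this application. Remember to set the PORT environment variable first (for example, to 8080), since the server exits immediately without it.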

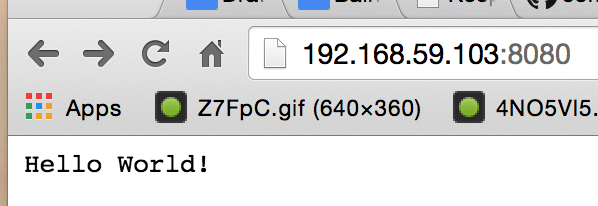

You can test the application by visiting http://localhost:8080 in your web browser. You should see “Hello World!” with log lines appearing in your console.
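If you would rather check the handler from code than from a browser, the standard library's `net/http/httptest` package can call it directly without opening a port. The sketch below assumes a recent Go toolchain (1.16 or later, for `io.ReadAll`), not the 1.4.2 image used later in this post, and `fetchBody` is a hypothetical helper written for this example.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
)

// handler mirrors the handler from main.go: log the path, then greet.
func handler(w http.ResponseWriter, r *http.Request) {
	log.Println("Received Request: ", r.URL.Path)
	fmt.Fprint(w, "Hello World!")
}

// fetchBody sends a GET request for path straight to the handler, without
// using the network, and returns the status code and response body.
func fetchBody(path string) (int, string) {
	req := httptest.NewRequest(http.MethodGet, path, nil)
	rec := httptest.NewRecorder()
	handler(rec, req)

	res := rec.Result()
	defer res.Body.Close()
	body, err := io.ReadAll(res.Body)
	if err != nil {
		log.Fatal("Could not read response body: ", err)
	}
	return res.StatusCode, string(body)
}

func main() {
	status, body := fetchBody("/")
	fmt.Println(status, body)
}
```

Because the recorder stands in for a real connection, the same check can run anywhere the code compiles, including inside the container built in the next step.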

Step Three: Your first Dockerfile

It’s time to write your first Dockerfile (a file that tells docker how to build your container).

Create a Dockerfile in your example-app directory by opening it in your favourite text editor and entering the following code block. The in-line comments explain the purpose of each stanza.

    # Base this docker container off the official golang docker image.
    # Docker containers inherit everything from their base.
    FROM golang:1.4.2

    # Create a directory inside the container to store our application and
    # then make it the working directory.
    RUN mkdir -p /go/src/example-app
    WORKDIR /go/src/example-app

    # Copy the example-app directory (where the Dockerfile lives) into the container.
    COPY . /go/src/example-app

    # Download and install any required third party dependencies into the container.
    RUN go-wrapper download
    RUN go-wrapper install

    # Set the PORT environment variable inside the container
    ENV PORT 8080

    # Expose port 8080 to the host so we can access our application
    EXPOSE 8080

    # Now tell Docker what command to run when the container starts
    CMD ["go-wrapper", "run"]

Now you should be able to build and run the container.

NOTE: If you are running on Mac OS X you’ll need to start boot2docker using the start command. It will print out a list of environment variables. Make sure to export these to your shell session.

    # Build the docker image for our application, remove any
    # intermediate steps and tag the result as "example-app"
    docker build --rm -t example-app .

    # Now that we have an image we can create and start a container,
    # which is an instance of an image. This container will forward
    # port 8080 from the container to the host.
    #
    # It will have the name test and is based off the "example-app"
    # tag. Finally, we'll run it in detached mode (backgrounded).
    docker run -p 8080:8080 --name="test" -d example-app

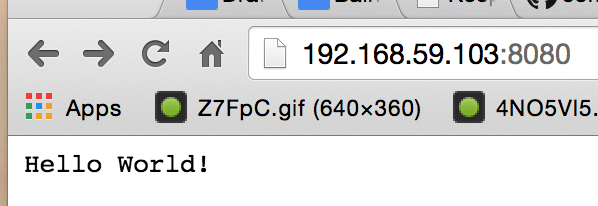

You should now be able to view the hello world application in your web browser by navigating to http://localhost:8080.

NOTE: If you’re running on Mac OS X, the docker container is running inside the Linux guest, so you need to use the guest’s address instead of localhost. By default this should be http://192.168.59.103:8080; otherwise run the boot2docker ip command to get the guest’s IP address.

Congratulations, you now have a containerized Go development environment that is repeatable and deterministic.

You can now use docker to start, stop and check the status of your container using the following commands.

    # List all containers (running and stopped)
    docker ps -a

    # Start a container using its id (retrieved using the above command)
    docker start $CONTAINER_ID

    # Stop a container using its id (retrieved using the above command)
    docker stop $CONTAINER_ID

Step Four: Auto-Rebuild

It really sucks having to rebuild your docker image, stop the old one, and run the new one every time you make code changes. So we’re going to leverage docker volumes and a neat little tool called Gin to make this a non-problem.

Volumes provide us with a mechanism for sharing a folder between the host and container. This way you won’t have to rebuild to propagate changes inside the container.

Gin is a tool that detects if changes have been made to any source files and then rebuilds the binary and runs the process. At its core is an HTTP proxy that is responsible for triggering the build when a new HTTP request is made and changes have been detected.

First we’ll have to make some simple changes to our Dockerfile by installing Gin and ensuring we execute Gin as our entry command. One major difference is that we’re now exposing port 3000 instead of 8080 as the Gin proxy will listen externally on 3000 and forward the traffic internally to port 8080 (the port our web application listens on).

    # Base this docker container off the official golang docker image.
    # Docker containers inherit everything from their base.
    FROM golang:1.4.2

    # Create a directory inside the container to store our application and
    # then make it the working directory.
    RUN mkdir -p /go/src/example-app
    WORKDIR /go/src/example-app

    # Copy the example-app directory (where the Dockerfile lives) into the container.
    COPY . /go/src/example-app

    # Download and install any required third party dependencies into the container.
    RUN go get github.com/codegangsta/gin
    RUN go-wrapper download
    RUN go-wrapper install

    # Set the PORT environment variable inside the container
    ENV PORT 8080

    # Expose port 3000 to the host so we can access the gin proxy
    EXPOSE 3000

    # Now tell Docker what command to run when the container starts
    CMD gin run

Alright, now we can build the image and start a container. However, this time we’re going to be leveraging docker volumes and exposing a different port.

    # Build the image just as we did before (--rm removes the
    # intermediate containers after a successful build)
    docker build --rm -t example-app .

    # Remove the container from Step Three so the name "test" can be reused.
    docker rm -f test

    # Expose port 3000 and link our CWD to our app folder in the
    # container. Your current working directory will need to be the
    # root of the example-app directory.
    docker run -p 3000:3000 -v `pwd`:/go/src/example-app --name="test" -d example-app

NOTE: If you are a Mac OS X user your application directory will need to be a child of /Users. For more information, please read the volume documentation.

You should now be able to view your application at http://localhost:3000. If you make any changes, the application should rebuild the next time you refresh your view.

There you have it, your very own containerized development environment for your web application written in Go!

25 Jan 2015

When you’re building something and reach a major milestone (such as solving a complex technical problem, overcoming issues of scale, or solving an elusive bug) it gives you and your team the energy to keep pushing forward; everything suddenly seems easier. Everyone gets the feeling that no matter what problems lie ahead they’re manageable. You and your team feel like an unstoppable force manifesting your own destiny to do something incredible.

However, these types of wins are typically few and far between. As day-to-day reality sinks back in, the boundless energy and feeling of being able to surmount any challenge fades. For a short period of time everyone was energized, aligned, and motivated because they felt the momentum behind what you were trying to accomplish. If only you could capture that feeling on a more constant basis. So the challenge becomes: how do you build and maintain momentum? Because at the end of the day, that feeling of progress is one of the main driving forces behind why people will continue to dedicate themselves to the cause.

This is where breaking down what you’re trying to accomplish into small deliverable units becomes incredibly valuable. It provides you with a logical point to step back and assess progress, gather feedback, and determine the next steps. By doing this you are also able to take time and celebrate, which creates a feeling of momentum.

My team and I celebrate accomplishments on an almost hourly basis. When a branch gets merged into the mainline of one of our git repositories, a sound of the team’s choosing plays throughout the main area of the office. This might sound a bit silly, but it’s a simple indicator of our progress and provides the individual with an acknowledgement that they’re getting shit done (which helps build a culture of doers).

On top of this, every second Friday we get together (with beer and popcorn) for an all-hands meeting where every team demos what they’ve been working on during the past release: anything that improves the product or our ability to build it. (We follow a structured release process because we’re building a mobile product.) Not only does it create a mechanism for holding people accountable, it also provides an opportunity to collect feedback and create alignment. At the end of the meeting, after every team has demoed, you can’t help but leave energized with a sense that we’re going to overcome any challenge that may present itself.

By focusing on the small things we’re able to build energy, which we leverage to propel us towards our goals. We become an unstoppable train chugging down the tracks towards our destination; the only choice is whether you’re getting on or off.